For the last few years, just before Christmas, I have compiled my reflections based on things that have cropped up in the year. I plan to continue doing this and keeping them all on this page.

Reflections 2025

The five core topics for my annual reflections are (in no particular order):

- Managers and technical experts dictating the agenda

- Not empowering people to do what is right

- Dismissing useful tools

- Not appreciating simplicity

- The rising cybersecurity threat.

Managers and technical experts dictating the agenda

Alarm management and procedures appear to be unrelated topics but we observe some similar issues. We generally have too many of them and they do a poor job of supporting people at work, which ironically is their whole purpose. Why does this happen?

I believe one reason is that people in influential positions assume that requirements can be defined solely through formal, technical sources. They believe that functional safety studies (such as HAZOP, LOPA and SIL Verification) identify the alarms that must be configured in the control system, and that regulations, international standards (e.g., ISO), and corporate policies determine what must be included in operating procedures.

The problem with this mindset is that it overlooks the impact on the operator. Every alarm is a potential distraction. Every procedure adds to workload. While alarms and procedures are essential, they are only effective when they are aligned with the operator’s needs, limitations and capabilities.

An alarm exists to tell an operator that prompt action is required. A procedure exists to help a competent person perform a task reliably and without error. When the agenda is driven purely by technical completeness or compliance, the result is often systems that are harder to operate, less intuitive, and may ultimately increase risk rather than reduce it.

Not empowering people to do what is right

Many people recognise that frontline work is not always well supported, yet when they are tasked with improving the situation, they often struggle to produce anything genuinely better. Part of the difficulty is that perfect solutions rarely exist; supporting safe and reliable operations requires accepting that every choice has consequences. Achieving an optimal compromise is really difficult.

A more fundamental issue is that people are not given the authority to make meaningful improvements. Organisations may want more usable systems but they put up barriers to changing the way things are done.

For example, operators may recognise that some alarms are unnecessary or a nuisance. But when they propose changes they are asked to argue the case using criteria that prioritise technical completeness and compliance over usability and operational value.

A similar pattern emerges when operators are asked to write procedures, often in a misguided approach to achieving end user involvement. Without guidance and support they naturally default to the existing style that uses technical detail, long explanations and engineering terminology. The result is a procedure that is just as difficult to use in practice as all the others, offering little improvement over what already exists.

In the end, these well-intentioned attempts at improvement simply reinforce the current system. We need to empower people to do what we all, deep down, know is right.

Dismissing useful tools

There are some very confusing messages circulating at the moment. On one hand, we are encouraged to learn from normal work and to value work as done as an important source of insight into effective practice. On the other hand, many of the tools that frontline teams and managers rely on (e.g. risk matrices, bowtie diagrams, even the Swiss cheese model) are criticised because they do not align perfectly with theoretical or academic principles.

Critics rarely offer practical alternatives. They often promote broad concepts or “ways of thinking” that may be technically sound but do not meet the everyday needs of users. People at the sharp end require methods that are understandable, quick to apply, and sufficiently accurate. They don’t need to be perfect, just good enough to support safe decisions in the real world of time and resource constraints.

Aiming for accuracy, complexity, and scientific rigour is commendable. But effectiveness usually relates to usability, communication, and efficiency. Tools that simplify reality are dismissed as unscientific, even though they consistently prove helpful in practice.

Every tool or model has limitations, but focusing solely on the technical flaws often ignores their real strengths. Ultimately, the value of a tool should be judged by how well it supports safety conversations and practical decision-making. Adding more rigour or complexity often undermines these benefits, making tools harder to understand, harder to communicate, and harder to use at the times when clarity and speed matter most.

Not appreciating simplicity

Trevor Kletz included simplicity in his principles for inherent safety. Simpler processes have fewer variables to manage and behave in a more transparent and predictable way. However, we often tolerate or even favour more complex solutions. We are easily persuaded that greater precision equates to greater efficiency or better control. In some cases, complexity is actively rewarded. Adding equipment, automation, or analytical sophistication can make us appear clever, while removing elements to simplify a system may be seen as taking the easy option.

Presenting a sophisticated analysis and use of the latest technology can make it easier to justify our approach. Simple solutions can appear unsophisticated or be viewed as a failure to exploit technological advances. Adding layers of control, alarms, and protection systems provides visible evidence that risks have been addressed.

It is true that some problems are inherently complex and over-simplified solutions will not be appropriate. However, treating simplicity as a positive design objective, and recognising complexity as a hazard to be actively managed, should lead us to solutions that are technically sound, while also being easier to operate, maintain, and recover when things go wrong.

The rising cybersecurity threat

There is compelling evidence that cybersecurity risks are increasing. Part of this may be driven by heightened geopolitical conflict, but a more significant factor is the growing reliance on digital systems and the adoption of increasingly sophisticated algorithms, including those incorporating Artificial Intelligence (AI). These developments increase system complexity, with its associated risks, and many organisations are becoming so dependent on technology that they cannot function if it is unavailable, whether through technical failures or cyber-attack.

In process safety, we have traditionally taken comfort from the independence of safety systems. If the safety instrumented system is separate from the basic process control system, we often assume that cybersecurity is largely a control-system problem rather than a safety concern. We need to be vigilant to make sure technology does not erode this approach. Also, recent cases highlight that being safe is not good enough.

The forced shutdown of Jaguar Land Rover production in 2025 following a cyber-attack is a graphic example. The plant itself was clearly very safe, but it was unable to manufacture cars for over a month. Whether this reflects bad luck or poor practice is almost beside the point. It should be taken as a warning that our current approach to technology and risk is no longer adequate.

Ironically, technology is frequently introduced to replace work carried out by people we trust (our own employees), which makes systems more vulnerable to the actions of people whose intentions are malicious. This should prompt us to reconsider how technology is used.

Human factors has long advocated keeping people “in the loop” when introducing automation. However, being informed that something is going wrong is not the same as being able to intervene effectively. A stronger approach would be to keep people “in command”. In this model, technology can be used to support people to optimise performance, but those people are fully aware of what is happening and ready to intervene if the technology fails or suffers from malicious acts.

This is not an argument against the use of technology or AI. Rather, it is a call to fully understand the risks they introduce and to recognise that current safety approaches may be insufficient. While human limitations are often cited as justification for automation, recognising where human capabilities exceed those of technology can lead to more resilient designs.

To achieve this, decision-makers must be empowered to choose options that keep humans in command. Technology should become a tool that supports people in doing the work, rather than doing the work for them. We should favour simpler solutions wherever possible and explicitly recognise the significant risks introduced by complexity, including the magnifying effect of cybersecurity threats. This is also a call to the human factors community to prepare itself to take a more active and positive leadership role in shaping how technology is deployed in safety-critical systems.

I hope you enjoy reading my reflections of 2025 and that you have a happy and healthy 2026. You can access all my publications, previous annual reflections and other information at https://abrisk.co.uk/

Reflections 2024

Alarm management

The good news is that the 4th edition of EEMUA 191, for which I was the lead author, was published a couple of weeks ago. The main objectives of the rewrite were to enhance the guide’s structure, make it more concise, improve consistency, and provide practical resources. Additionally, updates to standards, including IEC 62682, were incorporated, and references to these standards were made more explicit.

I approached the rewrite with the understanding that the previous edition of EEMUA 191 was largely accurate but not always easy to follow. This lack of clarity sometimes made it challenging for companies to understand the steps required to manage alarms effectively.

One key message emphasised in the new edition is the importance of having a robust alarm philosophy document for each process facility. It should clearly define what constitutes an alarm and outline how the full lifecycle of an alarm system is managed. An example philosophy has been included in the guide to provide practical assistance.

Several changes have been introduced in the new edition to clarify how alarms, alerts, and operator prompts should be used to support operators. Some content in the previous edition confused these concepts, particularly for batch processes.

Another significant revision involves the terminology. The previous edition’s use of the term “safety-related alarms” was found to be confusing and more aligned to functional safety than alarm management. Instead, the concept of a Highly Managed Alarm, as defined in IEC 62682, has been adopted. This approach acknowledges that companies are concerned with more than just safety. The new edition also discusses how to account for alarms during functional safety reviews.

Updates to advice on audible indications have also been made. The earlier recommendation that alarms should be 10 decibels above background noise has been rejected, as this could startle personnel. It is now specified that alerts do not require an audible indication.

Guidance on alarm prioritisation has been revised, and the importance of conducting formal alarm rationalisation studies has been highlighted. An example rationalisation procedure is included to guide users through this process.

The guide itself is free to EEMUA members, and can be purchased by non-members at https://www.eemua.org/products/publications/digital/eemua-publication-191

The presentation I gave at the EEMUA alarm management conference on the new guide is available for free at https://abrisk.co.uk/wp-content/uploads/2024/10/New-EEMUA-191-4th-Edition-Alarm-Management.pdf

Human factors role in supporting best practice

I presented a paper at the Ergonomics and Human Factors 2024 conference aimed at encouraging human factors practitioners to take a more active role in driving improvements in how work is carried out. It seems to me that the focus is often confined to the circumstances under which work is performed, which feels like a missed opportunity. Human factors, with its tools (e.g., task analysis) and deeper understanding of human behaviour, has the potential to contribute far more. Unfortunately, vocal “thought leaders” often distract from this potential by promoting catchy buzzwords that shift the agenda in unhelpful directions.

One common but misguided idea is that individuals who perform a task are automatically experts because they know how the task is performed in practice. While it is crucial for task practitioners to be actively involved in any analysis, labelling them as experts assumes that they instinctively know and adhere to best practices. In reality, people typically perform tasks the way they initially learned them, often in ways that are convenient at the time. My experience has shown that there are frequently opportunities to improve current practices by exploring recognised good practices and reverting to first principles of risk control.

Human factors studies should challenge task practitioners to explain why they perform tasks the way they do. A common reaction is to question the need for such scrutiny, often stating, “We’ve always done it this way, and there haven’t been any problems.” It’s important to recognise that before a major accident, the people working at a facility often did not perceive significant concerns about their practices. They may not have believed they were perfect, but they were comfortable enough to continue as they were.

You can view my paper at https://abrisk.co.uk/wp-content/uploads/2024/05/2024-CIEHF-HF-and-best-practice.pdf

Procedures

I have previously commented on procedures in my end-of-year reflections, but over the last 12 months, I have reached some clear conclusions. The two key messages I would like to share are:

- Write procedures for competent people.

- Be ruthless with wording, cutting anything that does not support the competent person.

I have much more to say on this subject, which you can explore in the paper I presented at this year’s Hazards conference. A significant update is that I have been contracted by the Centre for Chemical Process Safety (CCPS) to write a book on this topic, which is scheduled for publication in 2026.

View my Hazards paper at https://abrisk.co.uk/wp-content/uploads/2024/11/2024-Haz-34-Perplexing-persitence-of-poor-procedures.pdf

An example of a procedure written in my suggested style is available at https://abrisk.co.uk/wp-content/uploads/2024/09/ABRISK-High-Criticality-Task-Procedure-Template-01.docx

Hierarchy of risk control

I shared ideas at this year’s Hazards conference on how the hierarchy of risk control could evolve from an interesting concept into a practical tool. I explored whether adding more detail might enable its use in evaluating risk control strategies, incorporating inherent safety principles, and supporting ALARP demonstrations.

When asked for a potential title for my paper, ChatGPT suggested: “Elevating the Risk Control Hierarchy from a Hammer to a Scalpel: Refining Its Edge for Precise Safety Manoeuvres.”

I have developed an example of an expanded hierarchy, which you can download from https://abrisk.co.uk/abrisk-expanded-hierarchy-of-risk-control/. I concluded that the hierarchy should be split into two sections, differentiating prevention from mitigation. Drawing on ideas from Trevor Kletz, I positioned inherent safety on both sides: “What you don’t have can’t leak” and “Simple systems are inherently safer” on the prevention side, and “People who are not there cannot be harmed” on the mitigation side.

One of the prompts for exploring this approach was the commonly held view, particularly among those in technical roles, that engineered risk controls are inherently good, while those relying on humans are bad because people are unreliable. I believe this perspective is unhelpful and only holds true if human reliability is assessed using metrics designed for machines.

Initially, my expanded hierarchy felt like a solution in search of a problem. However, I now see its potential, especially in cases where ALARP needs to be demonstrated. The additional categories of risk control types provide a useful prompt for considering further ways to reduce risk. They also encourage a more balanced perspective on how engineered and human risk controls can work together effectively.

I would be delighted to discuss these ideas with anyone interested in developing them further to create a practical and impactful tool.

You can download the paper at https://abrisk.co.uk/wp-content/uploads/2024/11/2024-Haz-34-Hierarchy-of-Risk-Controls.pdf

Fatigue management

When I was studying Chemical Engineering in the 1980s, I would never have guessed that human fatigue (rather than metallurgy) would one day fall within my remit. However, clients have asked me multiple times to review their shift patterns in relation to fatigue. While fatigue is a common topic of discussion, it is often considered in isolation. Although the term “fatigue risk” is frequently used, it makes little sense on its own. Fatigue can certainly contribute to risk, but it is only one of many factors. In fact, arrangements intended to manage fatigue could inadvertently increase other health and safety risks.

To address this gap, I have developed my own guidance. I explain how fatigue must be evaluated alongside other considerations, such as ensuring there are enough competent staff to meet safe staffing requirements and verifying that safety-critical communication is effective.

I have also created an example fatigue management procedure that companies can adopt and adapt to their circumstances. Notably, I’ve found that very few of my clients have effective procedures in place. One concern I have is that while there is a lot of discussion about fatigue, both researchers and companies seem reluctant to identify specific areas of concern.

When focusing on shift work, we have clear guidance on what is considered acceptable—for example, shift duration, the number of consecutive shifts, and the length of breaks. It’s straightforward to compare a shift pattern against this guidance to identify deviations. However, in my experience, employees often work hours or days outside of their scheduled shifts, and I rarely (if ever) see analyses to assess whether this is problematic.

Analysing hours worked is not difficult. Companies can set rules or guidelines about acceptable limits, download data on hours worked by individuals (and if such data isn’t available, they may not even be able to demonstrate compliance with working time regulations), and compare the results. To demonstrate how simple this process can be, I created an Excel spreadsheet using basic formulas and conditional formatting. Once set up, it becomes an easy task to review hours worked and flag potential concerns.
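The same check can also be scripted. The following is a minimal Python sketch of the idea, not a copy of my spreadsheet. The three limits, the `Shift` record and the `flag_concerns` function are all hypothetical names and values chosen for illustration; replace them with the rules your own company has agreed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical limits for illustration only; substitute your company's rules.
MAX_SHIFT_HOURS = 12.0
MIN_REST_HOURS = 11.0
MAX_HOURS_IN_7_DAYS = 60.0


@dataclass
class Shift:
    """One period actually worked by one person (assumed record layout)."""
    person: str
    start: datetime
    end: datetime

    @property
    def hours(self) -> float:
        return (self.end - self.start).total_seconds() / 3600.0


def flag_concerns(shifts):
    """Return (person, shift start, message) for every limit exceeded."""
    flags = []

    # Group each person's shifts in chronological order.
    by_person = {}
    for s in sorted(shifts, key=lambda s: (s.person, s.start)):
        by_person.setdefault(s.person, []).append(s)

    for person, worked in by_person.items():
        for i, s in enumerate(worked):
            # Rule 1: length of a single shift.
            if s.hours > MAX_SHIFT_HOURS:
                flags.append((person, s.start,
                              f"shift of {s.hours:.1f} h exceeds the "
                              f"{MAX_SHIFT_HOURS:g} h limit"))

            # Rule 2: rest since the end of the previous shift.
            if i > 0:
                rest = (s.start - worked[i - 1].end).total_seconds() / 3600.0
                if rest < MIN_REST_HOURS:
                    flags.append((person, s.start,
                                  f"only {rest:.1f} h rest before shift "
                                  f"(minimum {MIN_REST_HOURS:g} h)"))

            # Rule 3: hours in shifts that started in the 7 days up to
            # and including this shift.
            window_start = s.start - timedelta(days=7)
            total = sum(w.hours for w in worked[: i + 1]
                        if w.start > window_start)
            if total > MAX_HOURS_IN_7_DAYS:
                flags.append((person, s.start,
                              f"{total:.1f} h worked in 7 days exceeds the "
                              f"{MAX_HOURS_IN_7_DAYS:g} h limit"))
    return flags


if __name__ == "__main__":
    # Made-up data: a long shift followed by a short rest period.
    example = [
        Shift("A", datetime(2025, 1, 6, 7), datetime(2025, 1, 6, 19)),
        Shift("A", datetime(2025, 1, 7, 3), datetime(2025, 1, 7, 17)),
    ]
    for person, start, message in flag_concerns(example):
        print(person, start.date(), message)
```

The input is the hours people actually worked, not the planned shift pattern, because the point is to pick up the extra hours and days that the roster does not show.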

Download my fatigue guide from https://abrisk.co.uk/wp-content/uploads/2024/10/ABRisk-Fatigue-Management-guide.pdf

Download an example fatigue management procedure from https://abrisk.co.uk/wp-content/uploads/2024/10/ABRisk-Fatigue-Management-procedure.pdf

Spreadsheet for evaluating fatigue from hours actually worked https://abrisk.co.uk/wp-content/uploads/2024/10/Hours-worked-calcs.xlsx

Reflections 2023

Procedures

I see many operating or maintenance procedures each year, and they are generally quite poor. Even when I look at ones I have written for clients I can usually find issues. The reality is that procedures will never be perfect, so we should not continually strive for it. However, a significant number of the procedures I come across not only miss the mark of perfection but are genuinely lacking in both technical content and format.

One of the fundamental issues is lack of clarity about why we need procedures. Too often they are used as a mechanism to capture everything about a task, which invariably expands to include details about the plant and process. The result is a jumbled, wordy document that fails to fulfil its most important purpose, which is to support people to perform a task safely and efficiently.

One of my bugbears is the additional pages that are added in the preamble. Why we have to have a summary, introduction, scope, purpose, objective, justification, PPE summary etc. is not clear to me, but dealing with this has been a battle I have not yet won. Nevertheless, if a preamble is deemed necessary, it is crucial that it imparts valuable information.

First of all, be clear about the purpose, which I believe is to support competent people when performing the task. A secondary purpose is to support people to become competent (i.e. the procedure is used during training and assessment).

Be clear about how the procedure is to be used in practice. If you expect it to be printed, followed and signed each time the task is performed then state that clearly at the front. But that is not appropriate for every task, only the most critical and complex. For other tasks the procedure may only be for reference, but again say that is the case.

Procedures cannot be written to cover every eventuality. Blindly following a procedure is not safe. Make it clear that the task should only be carried out using the procedure if it is safe to do so.

There also needs to be a process to deal with situations where the procedure cannot be followed as written. Simply stating that deviations need to be authorised is not a great help to people. Give options, that may include:

- If the task can be paused safely – stop, convene a meeting and generate a temporary procedure before proceeding;

- If pausing the task may create a hazard – agree how to proceed verbally and mark up the procedure to document how the task is performed in practice;

- If a hazardous situation has already occurred – do what you can to progress the task to a safe hold point, record what has been done and current status.

Other things that can be useful in the preamble include a summary of the major hazards and associated controls, preconditions that define the starting point for the task and a summary of the main sections / sub-tasks, which is really useful for pre-task meetings (toolbox talk) and training.

I have a procedure template as a download on my website https://abrisk.co.uk/procedures/

Human factors assessments of critical tasks

New guidance was issued earlier this year by the Chartered Institute of Ergonomics and Human Factors (CIEHF) regarding Safety Critical Task Analysis (SCTA). I was a member of the ‘Creative Team’ – the group who worked together over many Teams calls to draft the guide. Other members were Mark Benton, Lorraine Braben, Jamie Henderson, Paddy Kitching, Mark Sujan and Michael Wright. Pippa Brockington was our leader.

Members of the team agreed on many things, particularly around the methods used to prioritise tasks, the core role of Hierarchical Task Analysis (HTA) and approaches for identifying potential human failures. Also, we agreed on the set of competencies required by people who are assigned responsibility for carrying out analyses.

We had quite a lot of debate about recording human failure mechanisms (e.g. slips, lapses, mistakes and violations). My experience is that including this in an assessment leads to prolonged debate that ultimately makes no significant difference to the outcome. People identify the most likely type of human failure, but we know that accidents usually occur due to several unlikely (but not impossible) events happening together. Also, it focusses attention on managing human failure rather than managing risk overall. In practice people struggle to identify the mechanism and end up choosing one just so they have something written in a column in a table. This helps to perpetuate a perception that human factors is an academic or theoretical exercise, with limited practical benefit. The guide acknowledges different opinions on this subject and suggests that it is something to consider when identifying improvement actions, but does not suggest that the failure mechanisms need to be recorded.

Assessment of Performance Influencing Factors (PIFs) was another area of debate. This was largely because there has not been any guidance on the subject in the past and individual members of the Creative Team had developed their own approaches. Key messages in the guide are to make sure any reference to PIFs is specific to the task or step, avoiding vague comments about PIFs that could apply to any task. The challenge is that PIFs come in many different forms so anyone leading an analysis needs to have a broad understanding of human factors.

The guide from CIEHF is freely available at https://ergonomics.org.uk/resource/comah-guidance.html

Alarms

I reported last year that I was leading a review and update of EEMUA 191, the go-to guide for alarm management. Well, that is still the case, but I am pleased to say that the final draft is nearly complete. It will be published in 2024.

In parallel I have been discussing operator alarm response times with Harvey Dearden. He had investigated the commonly received ‘wisdom’ that the minimum allowable operator response time for a safety alarm to act as an Independent Protection Layer (IPL) is of the order of 20-30 minutes. He felt that this was a very cautious approach and wondered if a shorter time could be justified.

Through discussion we concluded that there should be scope to reduce the assumed operator response time if it could be demonstrated that conditions were favourable, taking into account technical and human factors.

My motivation for exploring this subject is that it may drive fundamental improvements in system design especially if being able to take credit for alarm response results in:

- An incentive to improve alarm system design;

- Reduced use of safety instrumented systems, which can be expensive to install and maintain and which increase system complexity, itself a source of risk.

We presented a paper on the subject at this year’s Hazards conference. You can download it at https://abrisk.co.uk/wp-content/uploads/2023/11/2023-Hazards-Jump-to-it-alarm-response-times.pdf

Human Factors Engineering (HFE) of construction and commissioning

HFE has been applied in major projects for a while but has typically only considered the human factors of the Operate phase (ensuring the plant’s design will support safe and efficient performance of operations and maintenance tasks). Far less consideration has been given to the preceding construction and commissioning phases. The reason is that these usually involve a lot of one-off activities so the time at risk is relatively low. However, the risk created can be significant.

Nick Wise, Neil Ford and I presented a paper at the Hazards conference about HFE in projects. This was based on learning from subsurface well intervention projects at a gas storage facility. We found that the temporary and dynamic nature of projects means that there is far greater reliance put on actions of people in managing risks during projects than would be accepted at a permanent operating site. The extensive use of specialist contractors has many advantages but inevitably leads to challenges due to cultural differences, attitudes to process safety, multiple work systems and approaches to managing risks. However, the operating company ultimately retains accountability for how risks are managed.

We found that a Human Factors Integration Plan was a good way of identifying issues arising during construction and commissioning, and creating a formal mechanism for developing effective solutions. Overall, it created a constructive forum to engage contractors, which improved everyone’s understanding of the risks and how they could be managed in practice.

The paper can be downloaded at https://abrisk.co.uk/wp-content/uploads/2023/11/2023-Hazards-HF-in-projects-at-operational-sites.pdf

Although this work was focussed on the risks between projects on an operating site it occurs to me that many of the issues will apply to the extensive decommissioning work being undertaken in the offshore oil and gas sector. I have been involved in one project where we carried out SCTA for plugging and abandonment of wells, and removal of platform topsides. This proved to be a very useful way of achieving a shared understanding of what was planned, the risks and associated controls. I am sure there is a lot of benefit in including HFE in all decommissioning projects.

As an aside, I have shared an HFE specification that can be used to create a common understanding of design requirements in projects. Feel free to download and adapt it for your own use: https://abrisk.co.uk/human-factors-engineering

Leak testing when reinstating plant

Guidance was issued earlier this year by the Energy Institute about reinstatement of plant after intrusive maintenance. It seems to have been picked up by the offshore industry, more so than onshore. The guide makes it clear that ‘Service testing’ using the process fluid during plant reinstatement should be less common than has been the practice in the past. Whether intended or not, the guide has created a perception that full pressure reinstatement testing using an inert fluid (e.g. nitrogen) is now required. Whilst this approach reduces the risk of hydrocarbon releases during leak testing it is not inherently safe. Companies need to be evaluating all options for reinstatement, and not just assume that one method is safer than another.

Michelle Cormack and I presented a paper at the Hazards conference on this subject. It can be downloaded at https://abrisk.co.uk/wp-content/uploads/2023/11/2023-Hazards-leak-testing-reinstatement-more-complicated-than-you-think.pdf

Reflections 2022

Procedures

Most companies have procedures and most procedures (in my experience) are not very good. Guidance is available that at face value appears to support the development of better procedures but it tends to be focussed on form over function – following the guidance can give you procedures that look good at a superficial level but do not actually perform well in practice.

One of the problems is that procedures are written without any clear idea of why they are needed or how they are going to make a contribution. There are lots of reasons to write procedures but the two that I am most interested in are:

- Supporting competent people to carry out a task;

- Supporting training and assessment of people so that they can become competent in performing the task.

In some cases a single procedure can satisfy both of these requirements. In other cases two procedures may be required for the same task.

Whilst I accept that format and presentation do have some influence on how a procedure performs, it is the content that makes the biggest difference. One thing that people are not very clear about is the level of detail to include. It is often suggested that they should have enough information for the man or woman on the street. That is fine if your intention is to give tasks to people with no training or experience. In practice this will not and should not happen. My guide is to consider someone competent to perform a similar task at another location. For example, a process operator or maintenance technician moving from another site.

Another reason to avoid unnecessary detail in procedures is that they will never be 100% correct for every potential scenario where the task is performed. By recognising that people have to be competent to perform a task using a procedure we can see that using proven skills and exercising judgement should be supported by the procedure instead of trying to replace them with additional lines of text. Emergency procedures are a good example. There is a danger that if we give people detailed procedures they will start to believe that is how every emergency will happen. Of course this is not the case.

Why do we need so much preamble to our procedures? I have been working with a client who had developed a new procedure template with input from consultants, technical authors and quality control. I am pleased to say that we have managed to reduce this to about two pages, which is probably a fair compromise.

Why do we need a date, time and signature against every step in a procedure? It is very common but does little more than cause suspicion about why it is considered necessary. It is fair to say that keeping track of progress within a procedure is important and useful to the person doing the task, but a simple tick box is more than enough. A record of when the task started and ended can be included if time and date are important, and possibly at interim stages if key hold points are identified.

Which leads me on to key hold points. In some tasks these can be critical but in most they probably are not. I need to develop some criteria for deciding when they should be used, but I find that if a procedure is well structured, especially if based on a hierarchical task analysis, it is quite easy to identify the need.

Another item that is usually covered in the guidance is warnings before critical steps. However, I am not aware of any clear guidance about which steps require a warning. I don’t think I have ever seen warnings within procedures used well. I have seen some procedures where the warnings take up more space than the main task steps. I commonly see actions included in warnings that inevitably cause confusion.

I think I understand the idea of highlighting certain steps in the procedure. But does this mean that steps that don’t have a warning are optional or can be performed carelessly? If that is the case why are those steps included in the procedure? My view is that if a task has been identified as critical enough to need a procedure then every step in that procedure has to be performed. The other issue I have is what should the warning say? One option is to say that the step is important, and so imply that others are not. The other option usually results in simply describing the step in a different way. My strong preference is to ensure each step is described clearly and concisely so that additional explanation is not required. Overall, I return to my explanation above that procedures are there to support competent people. Part of that competence is knowing the hazards and how the risks are controlled. This is something that I believe should be in the preamble to the procedure.

I had intended to write an article on procedures to address the issues I routinely encounter. Unfortunately I have not found the time. However, I did stumble across an old article I wrote in 2008 that I had completely forgotten about. Looking back it actually addresses some of my concerns and so I am sharing it here. https://abrisk.co.uk/papers/2008%20Tips%20-%20Better%20Procedures.pdf

(I have had permission from the original publisher Indicator FLM to share it – https://www.indicator-flm.co.uk/en/)

Alarms

I am currently leading a review and update of EEMUA 191, the go-to guide for alarm management. I am finding it a challenging but very interesting activity that is helping me to understand some of the issues around alarm management. I think the guide is currently generally ‘correct’ and has the answers to most of the questions. But there are some gaps and contradictions that I hope we are able to resolve.

One area of confusion for me has been ‘Safety Related’ and ‘Highly Managed’ alarms. I could not decide if they were the same thing or fundamentally different, or whether one was a subset of the other. They are terms that have come from IEC 61508 and IEC 62682. The largely numerical explanation for safety related may be useful in LOPA studies but less so for general alarm management. The definition of highly managed seems to tell us more about what to do with these alarms than how to identify them in the first place.

From discussion it appears that Highly Managed Alarms (HMA) is the preferred term and they should be identified for situations that rely on human actions to avoid significant consequences because there is no other control or barrier; or because the integrity of the controls or barriers is less than required. They are situations where process operators are our last line of defence.

I have tested this with a few clients recently from different sectors and have been pleasantly surprised by how useful it has been. It has shown that clarifying the nature of the event can be particularly useful so we can have HMA for safety (HMA-S), the environment (HMA-E), commercial threats (HMA-C), assets (HMA-A) and even quality (HMA-Q). Whilst it is tempting to suggest that safety has to be the main priority so the others should be disregarded, the fact is that all of these threats are important to any business and so influence the operators’ behaviours.

Similarly, and at the other end of the scale, a better understanding and use of Alerts is proving to be very useful. These are the events that do not require an operator response to avoid a consequence, so do not satisfy the definition of alarm, but may still be useful for situational awareness of operators or others. They provide a very useful solution in alarm rationalisation when people feel uncomfortable about deleting alarms. Also, they provide the opportunity to direct this information to other groups, so reducing operator workload.

Alerts can be useful and should not be ignored. The main difference is that we expect operators to monitor alarms continuously and respond immediately. But they only need to review alerts periodically (at the start and end of shift, and every couple of hours in between). Alerts that other groups can deal with can be captured in reports that may be sent at the end of each day so that work the following day can be planned.
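The distinction between alerts, alarms and Highly Managed Alarms described above can be sketched as a simple decision rule. This is only my illustration of the reasoning, not a method taken from EEMUA 191 or IEC 62682. The `Event` fields and the `classify` function are hypothetical names invented for the example, and a real rationalisation exercise involves far more judgement than three yes/no questions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    """Hypothetical description of a candidate alarm (illustrative fields only)."""
    operator_action_required: bool   # must the operator act to avoid a consequence?
    significant_consequence: bool    # is the consequence significant?
    other_barriers_adequate: bool    # do other controls or barriers have the required integrity?
    consequence_type: Optional[str] = None  # "S", "E", "C", "A" or "Q"


def classify(event: Event) -> str:
    """Return 'alert', 'alarm' or an HMA label for the event."""
    # No operator response needed to avoid a consequence: not an alarm.
    if not event.operator_action_required:
        return "alert"
    # The operator is the last line of defence against a significant consequence.
    if event.significant_consequence and not event.other_barriers_adequate:
        if event.consequence_type:
            return f"HMA-{event.consequence_type}"
        return "HMA"
    return "alarm"


print(classify(Event(False, False, True)))        # information only
print(classify(Event(True, False, True)))         # ordinary alarm
print(classify(Event(True, True, False, "E")))    # environmental HMA
```

The useful point of writing it down this way is that the alert question comes first: if no operator response is needed, the event never enters the alarm system, whatever its source.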

Hopefully the new edition of EEMUA 191 will be published in 2023. Watch this space…

Emergency exercises

I have been concerned that companies are not giving people enough opportunity to practise emergency response. They may organise one big exercise every couple of years, usually involving emergency services. But I didn’t think that was enough. It seemed that the problem was that these exercises were a drain on resources and were not leaving people enough time to do anything else. I have tried to encourage the use of regular table top exercises: simple activities requiring minimal resource that give everyone who may have to deal with an emergency one day the chance to talk through what may happen and what they would need to do.

It has been great this year to talk with clients that have been supporting their operating and emergency response teams with frequent table top exercises. It appears to me that these companies stand out from the others in many aspects. I can’t prove this but my conclusion is that making sure people take time to pause their normal work to talk about what can go wrong leads them to have a better appreciation of hazards and controls. Whether this is a direct result of the exercises themselves, or simply that companies that can manage this are better at managing everything, is not clear and does not really matter.

It is worth noting that the group of people required to deal with the early stages of any incident is usually the process operators. They usually work shifts, so the table top exercises have to occur frequently to make sure everyone has the chance to take part. Weekly may be a bit excessive but if this is stretched to monthly it is entirely possible that individuals may not take part in one for more than a year.

Proof Testing Safety Instrumented Functions

Papers (co-authored with Nick Wise and Harvey Dearden) were prompted by work with various clients, where carrying out task analyses of proof testing showed that it is more complicated than it appears and that the methods being used often fall short of where they need to be.

See my page on the subject of SIF testing here.

Reflections 2021

COVID-19

It’s still with us and unfortunately Omicron has ramped it up again. When I wrote my reflections a year ago I really did not think it would still be top of my list of issues. Personally, it hasn’t affected me too much. I have not (knowingly at least) caught the virus. Our eldest son was supposed to be coming home from university today but got a positive lateral flow earlier in the week so has had to delay his plans. He has no symptoms and luckily should be clear early next week and will make it home for Christmas.

I have kept busy with work. Lots of Teams meetings, which are generally working very well. In some cases, remote workshops are working better than face-to-face. Task analysis is a good example because it is allowing more and different people to attend workshops and is less disruptive at the client end because it can be organised in a more flexible way. The task Walk-Through and Talk-Through on site is still very important but can be done as a separate activity when COVID restrictions allow.

The reporting on the pandemic has highlighted to me the problems we have with data in general. The problem is that as soon as numbers are presented as part of an argument or explanation we start to believe that they give us an answer. That can be the case if we are sure that the data is truly representative and applicable to the question that is posed. I don’t know about the rest of the world but in the UK the number of positive test results has been continuously reported as evidence of the pandemic getting better or worse, when most of the time it appears to be most influenced by the number of tests carried out. If I am generous I would say this is just lazy reporting of headline numbers with no attempt to contextualise the meaning. I guess the same often happens when we are presented with quantified safety analyses. We are given numbers that give a simple answer to what is normally a far more complex question.

Another observation that has parallels with my human factors work is not taking human behaviour into account when deciding what controls to implement. A particular example is face coverings. I have seen several lab studies showing how they can stop droplets that may be expelled when you cough, sneeze or even sing. But that seems to have very little relevance to the real world. I know I am not alone in using the same face covering all day, putting it on and taking it off multiple times, and adjusting it the whole time I am wearing it. I don’t know how these behaviours affect the effectiveness of face coverings but I would have liked to see some results from real life applications instead of just the lab. At the risk of contradicting my rant above about use of data, infection rates in Wales over the last couple of months have been consistently higher than in England, at a time when face coverings were required in more places in Wales.

Emergency response procedures (nothing to do with COVID)

Several of my clients have asked me to review their emergency response procedures this year. It has usually cropped up as part of a wider scope of task analysis work that I have been involved in.

A key point I would like to emphasise is that treating emergency response as a task is rarely appropriate. One of our main aims when we conduct task analysis is to understand how the task is or will be performed. A key feature of emergencies is that they are unpredictable. Clearly we can carry out analyses for some sample scenarios but the danger is we start to believe that the scenarios we look at will happen in reality. This can give us a very false sense of security thinking we understand something that cannot be understood.

My main interest is usability of emergency procedures. That means the procedures must be very easy to read and provide information that is useful to the people who use them. Often emergency response procedures I see are too long and wordy.

My guidance to clients is to create two documents:

- Operational guide – supporting people responding to emergencies. Develop this first.

- Management system – the arrangements needed to make sure people can respond to emergencies when they occur.

The main contents of the operational guide are a set of “Role cards,” which identify the emergency roles and list the main activities and responsibilities in an emergency, and a set of “Prompt cards” that cover specific scenario types. Each card should fit on one page if possible, but can stretch to two if that makes it more useful. The guide can include other information as appendices, but only content that would be useful to people when responding to an emergency.

There should be a role card for each emergency role and not a person’s normal role. For example, the Shift Manager may be the person who, by default, becomes the Incident Controller so it may appear the terms can be used interchangeably. But in some scenarios the Shift Manager may not be available and someone else would have to take the role. Also, there will be times when an individual has to fulfil several emergency roles. For example, in the early stages of an incident the Shift Manager may act as Incident Controller and Site Main Controller until a senior manager arrives to take on the latter role.

Prompt Cards should cover every realistic scenario but you do not need a different one for every scenario. For example, you may have a number of flammable substances so may have several fire scenarios. However, if the response is the same for each you should only have one prompt card for fire. In fact, you will probably find that the same main actions have to be considered for most incidents and it makes sense to have one main Prompt Card supplemented by scenario specific cards that list any additional actions.

The management system is important, but secondary to the Operational Guide. It should identify the resources (human, equipment etc.) required for emergency response, training and competence plans including emergency exercises, communications links and technologies, cover and call-in arrangements, audit and review, management of change etc. The format is less of a concern because it is not intended as an immediate support to people when responding to emergencies, although it may be a reference source so should still be readily available.

Human Factors Engineering

Human Factors Engineering (HFE) is the discipline for applying human factors in design projects. Whilst specialists can be brought into projects to assist, their ability to influence the basic design can be limited. Much better results are achieved if discipline engineers have some knowledge of human factors, especially at the earliest stage of a project. Unfortunately HFE is perceived as concerned only with detail and so left towards the end of a project. Opportunities to integrate human factors into the design are often missed as a result.

I find that engineers are often interested in human factors but rarely get the opportunity to develop their skill in the subject. They already have a lot to do and do not have the time or energy to look for more work. This is a shame because with a little bit of awareness they can easily incorporate human factors into their design with little or no additional effort.

To support designers to improve their awareness of HFE I have created an online course. It introduces HFE and how it should be implemented in projects, from the very earliest concept/select stages through to detail design and final execution. It should be useful to anyone involved in design of process plant and equipment including process engineers, technical safety and operations representatives.

The course is presented as a series of 2-minute videos explaining how to carry out HFE in projects. It is hosted on Thinkific and the landing page is https://lnkd.in/dPeq52RU

The full course includes 35 videos (all less than 2 minutes long), split into 7 sections, each with a quiz to check your understanding. It also includes a full transcript that you can download. You have up to 6 months to work through the course.

There is a small fee (currently $30) for the full course. There is a free trial of the course, intended to give you an idea of how the course is presented. You will need to create a user account to see that.

Technology and communications

I have been working with my friends at Eschbach.com, exploring the human factors of communications and how technology can help. This started with a focus on shift handover. It is easy to focus on the 10 or 15 minutes of direct communication when teams change but to be effective it has to be a continuous process performed 24 hours per day.

One of the ironies is that the busiest and most difficult days are the ones where communication at shift handover is most important. But on those days people do not have the time or energy to prepare their handover reports or even update their logs during the shift.

In our everyday life we have become accustomed to instantaneous communication with friends and family using our mobile phones. Most of us probably use WhatsApp or Messenger etc. to send important and more trivial messages to friends and family. Often these include photos because we all know an image can convey so much more than words. Wouldn’t it be good to use some of these ideas to help communication at work?

We held a couple of really good, highly interactive webinars on the subject. You can see a recording at https://player.vimeo.com/video/539446810.

If you would like us to run another for your company, industry group etc. let me know because we are keen to continue the conversation.

See my page on technology and communication here.

ALARP

I had a series of three articles published in The Chemical Engineer with co-author Nick Wise. The main theme was on deciding whether risks are As Low As Reasonably Practicable (ALARP). Our interest was whether the various safety studies we carry out can ever demonstrate ALARP. Our conclusion was the studies are our tools to help us do this but ultimately we all have to make our own judgement. The main objective should be to satisfy ourselves that we have the information we need to feel comfortable explaining or defending our judgement to others who may have an interest.

You can access the papers here.

Trevor Kletz compendium

Finally, I am pleased to say the Trevor Kletz compendium was published earlier this year by the Institution of Chemical Engineers (IChemE) in partnership with publisher Elsevier. I was one of the team of authors.

Trevor Kletz had a huge impact on the way the process industry viewed accidents and safety. He was one of the first people to tackle the issues and became internationally renowned for sharing his ideas on process safety. We hope that this compendium will introduce his ideas to new audiences.

The book focuses on understanding systems and learning from past accidents. It describes approaches to safety that are practical and effective and provides an engineer’s perspective on safety.

Trevor Kletz was ahead of his time and many of his ideas and the process safety lessons he shared remain relevant today. The aim of the compendium was to share his work with a new audience and to prompt people who may have read his books in the past to have another look. Trevor did not expect everyone to agree with everything he said, but he was willing to share his opinions based on experience. We have tried to follow this spirit in compiling this compendium.

It is available from https://www.elsevier.com/books/trevor-kletz-compendium/brazier/978-0-12-8194478

Contact me for a discount code!

Reflections 2020

COVID-19

Let’s get this over with at the start. The pandemic has certainly caused me to think:

- Managing risks is difficult enough. Mixing in politics and public opinion magnifies this enormously;

- We have to remember what it means to be human. Restricting what we can do may reduce the immediate risk but life is for living and there has to be a balance, especially when you take into account the knock-on effects of measures taken;

- Our plans for managing crises usually assume external support and bought in services. These are far more difficult to obtain when there is a global crisis and everyone wants the same.

I have not given a great deal of thought into how these points can be applied to my work but they do give a bit of perspective and have affected many aspects of work, including management of our ‘normal’ risk.

Critical Communications

I have shared my views on shift handover several times over the years and it is still something we need to improve significantly. However, this year’s pandemic has highlighted how much we rely on person to person communication in many different ways. Infection control and social distancing disrupted this greatly. Just look at how many Skype/Teams/Zoom calls you have had this year compared to last!

My concern is that companies are failing to recognise the importance of communication or the fact that a lot of it takes place informally. You can hold very successful meetings over the internet but with people working separately you miss all those chance encounters and opportunities to have a chat when passing. These are the times when more exploratory discussions take place. They are safe times when you can ask daft questions and throw in wild ideas. Even if most of the time is spent talking about the weather or football, they help you get to know your colleagues better; fostering teamwork.

I posted an article on LinkedIn to highlight the issues with communication and COVID-19, which you can access at https://www.linkedin.com/pulse/dont-overlook-process-safety-andy-brazier. My concern was that companies had implemented the measures they needed to handle the personal health aspects of the pandemic but were not considering the knock-on effects on communication. This is a classic management of change issue. It is easy to focus on what you want or need to do. But you always need to be aware of the unintended consequences.

Posting my article led to discussions with software company Eschbach and we wrote a whitepaper together. You can download it from my website.

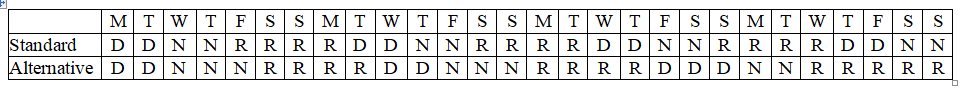

An illustration of how companies do not always think about communication has arisen when looking at shift patterns. It is quite right that risks of fatigue from working shifts have to be managed, but that is not the only concern. For 12 hour shifts a fairly standard pattern is to work 2 days, 2 nights and then have 4 days off. This is simple and does well on fatigue calculations. The first day shift rotates through the days. This means that at one part of the cycle the first day is on a Saturday and the following week it is on a Sunday. The problem is that at the weekend the day staff are not present, so if important information is missed at the handover there is no one available to fill in the gaps or answer questions.
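The way the first day shift drifts through the week can be checked with a few lines of code. This is a minimal sketch of the 2 days, 2 nights, 4 off pattern described above. The start date and the function names are arbitrary choices of mine for illustration, and it looks at a single crew only.

```python
from datetime import date, timedelta

# 12-hour pattern described above: 2 days, 2 nights, 4 off (an 8-day cycle).
PATTERN = ["D", "D", "N", "N", "O", "O", "O", "O"]


def first_day_shifts(start: date, cycles: int) -> list[date]:
    """Date of the first day shift in each of `cycles` consecutive cycles."""
    return [start + timedelta(days=len(PATTERN) * i) for i in range(cycles)]


def weekend_starts(start: date, cycles: int) -> list[date]:
    """First day shifts that fall on a Saturday or Sunday."""
    return [d for d in first_day_shifts(start, cycles) if d.weekday() >= 5]


# Arbitrary example start: Monday 6 January 2020.
start = date(2020, 1, 6)
for shift_date in first_day_shifts(start, 7):
    flag = "  <- no day staff on site" if shift_date.weekday() >= 5 else ""
    print(shift_date.strftime("%a %d %b %Y") + flag)
```

Because the cycle is 8 days long and the week is 7, the first day shift moves on by one weekday every cycle. In any run of seven cycles a crew therefore returns from its break on a Saturday once and on a Sunday once.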

Alternative shift patterns are available that ensure the first day shift always happens on a weekday when the day workers are also present. The patterns are a bit more complicated and may involve working one or two additional shifts before having a break, so don’t score so well in the fatigue calculations. I am not saying that everyone should change to a shift pattern like that, but I am pointing out that we need to give more recognition to communication and put more effort into supporting it.

The table below shows a comparison of Standard vs an Alternative shift pattern (days of the week along the top).

Control room design

Last year’s news was the publication of the 3rd edition of EEMUA 201 “Control Rooms: A Guide to their Specification, Design, Commission and Operation.” Unfortunately, it is not available free, unless you, or your employer, are a member of EEMUA. However, a free download is now available at https://www.eemua.org/Products/Publications/Checklists/EEMUA-control-rooms-checklist.aspx that includes a high level Human Factors Integration Plan template that can be used for new or modification control room projects (actually it is not a bad template for any type of project). It also includes a checklist for evaluating control rooms at either the design or operational stages. I have used the checklist a number of times this year and am pleased to confirm that it really is very effective and useful. You should really use it with the 201 guide, but even on its own the checklist shows you what to consider and it will probably be a useful way of persuading your employer to buy a copy of EEMUA 201.

On the subject of control rooms I published another article on LinkedIn this year titled “Go and tidy your (Control) room.” I used COVID-19 to reinforce the message I often give my clients about the state of their control rooms. My opinion is that we need to make sure our control room operators are always at the top of their game and having a pleasant and healthy place to work can help this. Unfortunately the message often seems to fall on deaf ears. Access the article at https://www.linkedin.com/pulse/go-tidy-your-control-room-andy-brazier/

Internet of Things (IoT)

This is a popular buzz word at the moment. The idea is that over the decades technology has given us more and more devices. Recently they have become smarter and so perform more functions autonomously. But there is even greater potential if they can be connected to each other, especially if there is an internet or cloud based system that can perform higher level functions.

Whilst I have a passing interest in the technology I am far more interested in how people fit into this future. The normal idea seems to be that people are just one of the ‘things’ that can be connected to the devices via the cloud. I have a number of problems with this. Firstly, ergonomics and human factors has shown us that technology often fails to achieve its potential because people cannot use it effectively or simply don’t want to. Perhaps more significantly, I feel that the current focus on the technology means that the potential to harness human strengths will be missed.

It is true that simple systems can be automated reasonably easily, and if they are used widely the investment in developing the technology can be justified. But automating more complicated systems is far more difficult. The driverless or autonomous car gives us a very good example. How many billions of pounds/dollars have already been spent on developing that technology? There will probably be a good return on this investment in the end because once it is working effectively it will result in many thousands or millions of car sales. Industrial and process systems are complicated and tend to be unique. It is unthinkable that such massive investment will be made into developing automation that can handle every mode of operation and handle every conceivable event. This is why we still need people, and will do for many years to come.

Although the focus is currently on the technology, I think the greatest advances are going to come from using IoT to support people rather than replace them. By understanding what people can do better than technology we will achieve much more reliable and efficient systems.

Loss Prevention Bulletin

Good news for all members of the Institution of Chemical Engineers (IChemE) is that they will have free access to Loss Prevention Bulletin from January. I believe this is a very significant step forward, making practical and accessible information about process safety readily available to so many more people. I have had a couple of papers published in the bulletin this year. They both use slightly quirky but tragic case studies to illustrate important safety messages. You can download them at

http://abrisk.co.uk/papers/2020%20lpb274_pg07%20Abergele%20Train%20Crash.pdf

http://abrisk.co.uk/papers/2020%20lpb275_pg11%20Dutch%20surfers.pdf

Reflections 2019

Control room design

The great news is that the new, 3rd edition of EEMUA 201 was published this year. It has been given the title “Control Rooms: A Guide to their Specification, Design, Commission and Operation.” I was the lead author of this rewrite, and it was fascinating for me to have the opportunity to delve deeper into issues around control room design; especially where theory does not match the feedback from control room operators.

I would love to be able to send you all a copy of the updated guide but unfortunately it is a paid for publication (free for some EEMUA members). However, I have just had a paper published in The Chemical Engineer describing the guide and this is available to download free at https://www.thechemicalengineer.com/features/changing-rooms/.

Now it has been published my advice about how to use the updated guide is as follows:

- If you are planning a new control room or upgrading or significantly changing an existing one you should be using the template Human Factors Integration Plan that is included as Appendix 1. This will ensure you consider the important human factors and follow current good practice;

- If you have any form of control room and operate a major hazard facility you should conduct a review using the checklist that is included as Appendix 2. This will allow you to identify any gaps you may have between your current design and latest good practice.

If you have any comments or questions about the updated guide please let me know.

Quantifying human reliability

It has been a bit of a surprise to me that human reliability quantification has cropped up a few times this year. I had thought that there was a general consensus that it was not a very useful thing to attempt.

One of the things that has prompted discussions has come from the HSE’s guidance for assessors, which includes a short section that starts “When quantitative human reliability assessment (QHRA) is used…”. This has been interpreted by some people to mean that quantification is an expectation. My understanding is that this is not the case, but in the recognition that it still happens HSE have included this guidance to make sure any attempts to quantify human reliability are based on very solid task analyses.

My experience is that a good quality task and human error (qualitative) analysis provides all the information required to determine whether the human factors risks are As Low As Reasonably Practicable (ALARP). This means there is no added value in trying to quantify human reliability and the effort it requires can be counter-productive, particularly as applicable data is sparse (non-existent). Maybe the problem is that task analysis is not considered to be particularly exciting or sexy? Also, I think that a failure to fully grasp the concept of ALARP could be behind the problem.

My view is that demonstrating risks are ALARP requires the following two questions to be answered:

- What more can be done to reduce risks further?

- Why have these things not been done?

Maybe the simplicity of this approach is putting people off and they relish the idea of using quantification to conduct some more ‘sophisticated’ cost benefit analyses. But I really do believe that sticking to simple approaches is far more effective.

Another thing that has prompted discussions about quantification is that some process safety studies (particularly LOPA) include look-up tables of generic human reliability data. People feel compelled to use these to complete their assessment.

I see the use in other process safety studies (e.g. LOPA) as a different issue to stand alone human reliability quantification. There does seem to be some value in using some conservative figures (typically a human error rate of 0.1) to allow the human contribution to scenarios to be considered. If the results achieved do not appear sensible a higher human reliability figure can be used to determine how sensitive the system is to human actions.
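The sensitivity check described above is simple arithmetic and can be sketched in a few lines. This assumes the usual simplified LOPA calculation, where the initiating event frequency is multiplied by the probability of failure on demand of each independent layer. Every number and name below is hypothetical and chosen only to show the effect of changing the human error figure.

```python
from math import prod


def mitigated_frequency(initiating_per_year: float, pfds: list[float]) -> float:
    """Initiating event frequency multiplied by the failure probability of each layer."""
    return initiating_per_year * prod(pfds)


# Hypothetical scenario: all values are illustrative assumptions.
INITIATING_PER_YEAR = 0.1     # initiating event frequency
OTHER_LAYER_PFDS = [0.01]     # one engineered protection layer

# 1.0 = no credit for the operator, 0.1 = the typical conservative figure,
# 0.01 = the higher reliability that would need a detailed task analysis to justify.
for human_error in (1.0, 0.1, 0.01):
    frequency = mitigated_frequency(INITIATING_PER_YEAR, OTHER_LAYER_PFDS + [human_error])
    print(f"human error probability {human_error:<5} -> {frequency:.1e} events per year")
```

If the result only becomes acceptable at the lowest human error figure, the scenario is leaning heavily on the operator. That is the signal that more human factors work is needed rather than a better number.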

It is possible to conclude that the most sensible approach to managing risks is to place higher reliance on the human contribution. If this is the case it is then necessary to conduct a formal and detailed task analysis to justify this; and to fully optimise Performance Influencing Factors (PIF) to ensure that this will be achieved in practice.

It is certainly worth looking through your LOPA studies to see what figures have been used for human reliability and whether sensible decisions have been made. You may find you have quite a lot of human factors work to do!

Maintaining bursting discs and pressure safety valves

I am pleased to say that my paper titled “Maintenance of bursting disks and pressure safety valves – it’s more complicated than you think” was published in the Loss Prevention Bulletin in 2019. It highlights that these devices are often our last line of defence but we have minimal opportunities to test them in situ and so have to trust they will operate when required. However, there are many errors that can occur during maintenance, transport, storage and installation that can affect their reliability. Access my page on relief valves and bursting discs here.

Unfortunately I have still not written my next paper in the series, which will be on testing of Safety Instrumented Systems (SIS). It is clear to me that often the testing that takes place is not actually proving reliability of the system. Perhaps I will manage it in 2020.

However, I did have another paper published in The Chemical Engineer. It is actually a reprint of a paper published in Loss Prevention Bulletin in 2013, so many of you have seen it before. It is about process isolations being more complicated than you think. I know this is still a very relevant subject. Access my page on process isolations here.

Inherent Safety

I have been aware of the general concept of Inherent Safety for a long time, with Trevor Kletz’s statement “what you don’t have can’t leak” explaining the main idea so clearly. However, I have looked a bit more deeply into the concept in recent months and am now realising it is not as simple as I thought.

One thing that I now understand is that an inherently safe solution is not always the safest option when all risks are taken into account. The problem is that it often results in risk being transferred rather than eliminated; resulting in arrangements that are more difficult to understand and control.

I am still sure that inherent safety is very important but maybe it is not thought about carefully enough. The problem seems to be a lack of tools and techniques. I am aware that it is often part of formal evaluations of projects at the early Concept stage (e.g. Hazard Study 0) but I see little evidence of it at later stages of projects or during operations and maintenance.

I have a couple of things going on at the moment where I am hoping we will develop the ideas about inherent safety a bit. They are:

- I am part of a small team writing a book – a Trevor Kletz compendium. We are aiming to introduce a new audience to his work and remind others who may not have looked at it for a while that much of it is still very relevant. A second, equally important aim is to review some of Trevor’s ideas in a current context (including inherent safety) and to use recent incidents to illustrate why they are still so important. We hope to publish in late 2020, so watch this space.

- I am currently working on a paper for Hazards 30 with a client on quite an ambitious topic. It will be titled “Putting ‘Reasonably Practicable’ into managing process safety risks in the real world.” Inherent safety is an integral part of the approach we are working on.

Reflections 2018

Piper Alpha

We passed the 30-year anniversary of this disaster. It is probably the event that has most affected my career as it highlighted so many human factors and process safety issues. I know we have a better understanding of how accidents like Piper Alpha happen and how to control the risks but it is easy for things to get forgotten over time. I wrote two papers for the anniversary edition of Loss Prevention Bulletin. One looked at the role of ‘shared isolations’ (where an isolation is used for several pieces of work). The other was concerned with shift handover, which is one area that I worry industry has still not properly woken up to.

Control room design

One of my main activities this year has been to rewrite the EEMUA 201 guidance document on design of control rooms and human machine interfaces in the process industry. I have investigated a range of aspects of design and made a point of getting input from experienced control room operators, control room designers, ergonomists and regulators. This has highlighted how important the design is for the operator to maintain the situational awareness they need to perform their job safely and efficiently; and to detect problems early to avoid escalation. This is not just about providing the right data in the right format; but also making sure the operator is healthy and alert at all times so that they can handle the data effectively. A complication is that control rooms are used by many different people who have different attributes and preferences. I found that currently available guidance did not always answer the designers’ questions or address the operators’ requirements but I hope that the new version of EEMUA 201, which will be published in 2019, will make a valuable contribution.

Arguably a bigger issue than original design is the way control rooms are maintained and modified over their lifetime. There seems to be a view that adding “just another screen” or allowing the control room to become a storage area for any paperwork and equipment that people need a home for is acceptable. The control room operator’s role is highly critical and any physical modification or change to the tasks they perform or their scope of responsibility can have a significant impact. We, quite rightly, put a lot of emphasis on designing effective control rooms and so any change needs to be assessed and managed effectively, taking into account all modes of operation including non-routine and emergency situations.

Safety critical maintenance tasks

Whilst I have carried out safety critical task analysis for many operating tasks over the years, it is only more recently that I have had the opportunity to do the same for maintenance tasks. This has proven to be very interesting. A key difference when compared to operations is that most maintenance tasks are performed without reference to detailed procedures and there can be almost total reliance on competence of the technicians. In reality only a small proportion of maintenance tasks are safety critical, but analysis of these invariably highlights a number of potentially significant issues.

I have written a paper titled “Maintenance of bursting disks and pressure safety valves – it’s more complicated than you think.” It will be published in the Loss Prevention Bulletin in 2019. This highlights that the devices are often our last line of defence but we have no way of testing them in situ and so have to trust they will operate when required. However, there are many errors that can occur during maintenance, transport, storage and installation that can affect their reliability.

Another example of a safety critical maintenance task is testing of safety instrumented systems. This is likely to be my next paper because it is clear to me that often the testing that takes place is not actually proving reliability of the system. Another task I have looked at this year was fitting small bore tubing. It was assumed that analysing this apparently simple task would throw up very little but again a number of potential pitfalls were identified that were not immediately obvious.

Safety 2/Safety Different

I am increasingly bemused by this supposedly “new” approach to safety. The advocates tell us that focussing on success is far better than the “traditional” approach to safety, which they claim is focussed mainly on failure (i.e. accidents). The idea is that there are far more successes than failures so far more can be learnt. Spending more time on finding out how work is actually done instead of assuming or imagining we know what really happens is another key feature of these approaches.

I fully agree that there are many benefits of looking at how people do their job successfully and learning from that. But I do not agree that this is new. The problem seems to be that people promoting Safety 2/Different have adopted a particular definition of safety, which is one that I do not recognise. They suggest that safety has always been about looking at accidents and deciding how to prevent them happening again. There seems to be little or no acknowledgement of the many approaches taken in practice to manage risks. I certainly feel that I have spent most of my time in my 20+ year career understanding how people do their work, understanding the risks and making practical suggestions to reduce those risks, and have observed this in nearly every place I have ever worked. As an example, permit to work systems have been an integral part of the process industry for a number of decades. They encourage people at the sharp end to understand the tasks that are being performed, assess the risks and decide how the work can be carried out successfully and safely. This seems to fulfil everything that Safety 2/Different is claiming to achieve.

My current view is that Safety 2/Different is another useful tool in our safety/risk management toolbox. We should use it when it suits, but in many instances our “traditional” approaches are more effective. Overall I think the main contribution of Safety 2/Different is that it has given a label to something that we may have done more subconsciously in the past, and by doing that it can assist by prompting us to look at things a bit differently in order to see if there are any other solutions.

Bow tie diagrams

I won’t say much about these as I covered this in last year’s Christmas email with an accompanying paper. But I am still concerned that bow tie diagrams are being oversold as an analysis technique. They offer an excellent way of visualising the way risks are managed but they are only effective if they are kept simple and focussed.

And finally

I had a paper published in Loss Prevention Bulletin explaining how human bias can result in people having a misperception about how effective procedures can be at managing risk. This bias can affect people when investigating incidents and result in inappropriate conclusions and recommendations. The paper was provided as a free download by IChemE.

Reflections 2017

I have written a few papers this year. I have decided to share two of them:

1. Looking at the early stages of an emergency, pointing out that this is usually in the hands of your process operators, often with limited support. http://abrisk.co.uk/papers/2017%20LPB254pg09%20-%20Emergency%20Procedures.pdf

2. My views on Bowtie diagrams, which seem to be of great interest at the moment. I hope this might create a bit of debate. http://abrisk.co.uk/papers/Bowties&human_factors.pdf

My last two Christmas emails included some of my ‘reflections’ of the year. When I came to write some for 2017 I found that the same topics are being repeated. But interestingly I have had the opportunity to work on a number of these during the year with some of my clients. As always, these are in no particular order.

Alarm management

This is still a significant issue for industry. But it is a difficult one to address. There really is no short cut to reducing nuisance alarms during normal operations and floods of alarms during plant upsets. Adopting ‘Alerts’ (as defined in EEMUA 191) as an alternative to an alarm appears to be an effective ‘enabler’ for driving improvements. It provides a means of dealing with something people think will be ‘interesting’ to an operator, but that is not so ‘important.’

During the year I have provided some support to a modification project. I was told the whole objective was simplification. But a lot of alarms were being proposed, with a significant proportion being given a high priority. Interestingly, no one admitted to being the person who had proposed these alarms; they had just appeared during the project, and it turned out the project did not have an alarm philosophy. We held an alarm review workshop and managed to reduce the count significantly. Some were deleted and others changed to alerts instead. The vast majority of the remaining alarms were given Low Priority.

Process isolations

I have had the chance to work with a couple of clients this year to review the way they implement process isolations. This has reinforced my previous observations that current guidance (HSG 253) is often not followed in practice. But having been able to examine some examples in more detail, it has become apparent that in many cases it is simply not possible to follow the guidance, and in some cases it would introduce more risk. The problem is that until we did this work people had ‘assumed’ that their methods were fully compliant both with HSG 253 and with their in-house standards, which were usually based on the same guidance.

Interlocks

I presented a paper at this year’s Hazards 27 on this subject, suggesting that keeping interlocks to the minimum and as simple as possible is usually better, whereas the current trend seems to be for more interlocks with increasing complexity. My presentation seemed to be well received, with several people speaking to me since saying they share my concerns. But, without any formal guidance on the subject it is difficult to see how a change of philosophy can be adopted in practice.

Human Factors in Projects

I presented a paper at EHF2017 on the subject of considering human factors in projects as early as possible. To do this human factors people need to be able to communicate effectively with other project personnel, most of whom will be engineers. Also, we need to overcome the widely held view that nothing useful can be done until later in a project when more detailed information is available.